Google Search Essentials explains how to make your content eligible for Google Search and more visible in its results.

It’s a simplified version of the old Google Webmaster Guidelines and offers useful guidance for businesses that want to improve their SEO.

This guide will discuss the most important things you need to consider, and how to use them to improve your online strategy.


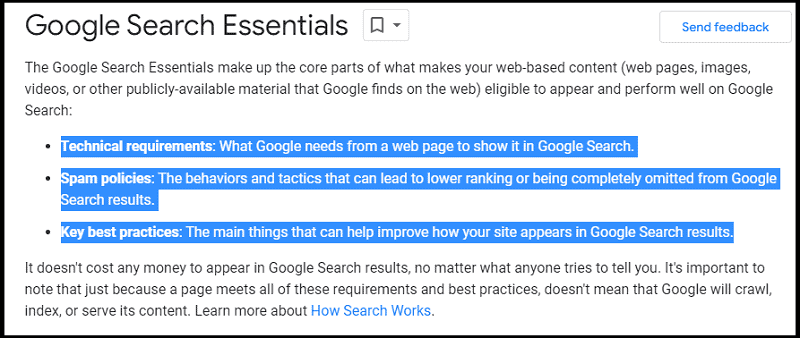

What Are Google Search Essentials?

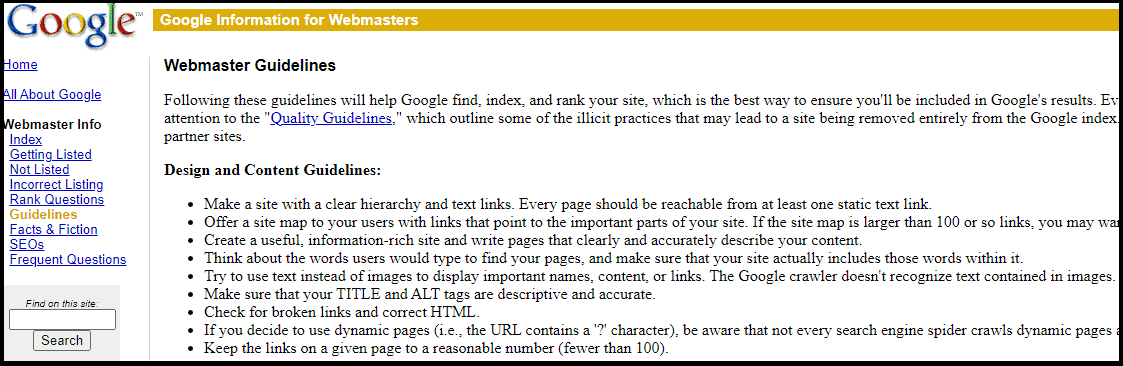

Google Search Essentials are the official new guidelines for site owners.

It’s a simplified version of the Google Webmaster Guidelines, which many businesses have used for about 20 years to improve their SEO.

So, when were they released?

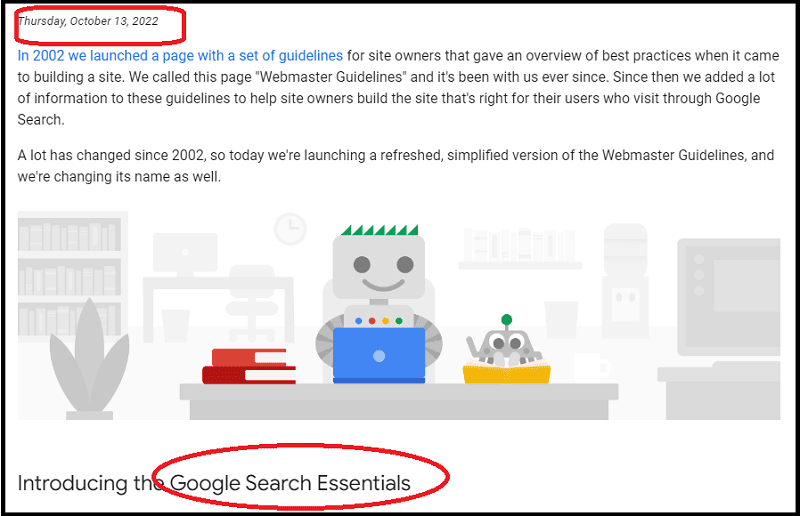

On October 13th, 2022, Google announced the rebranding of the Webmaster Guidelines as “Search Essentials.”

Not only did Google make the former Webmaster Guidelines easier to understand, but they also updated them to appeal to a wider audience.

Google has also been gradually removing the word “webmaster” from its services.

For instance, “Google Webmaster Central” was rebranded to “Google Search Central.”

But wait, let me tell you something.

The way site owners can optimize their content has changed over the years.

The tactics that used to be effective for SEO don’t work as well as they once did.

Google Search Essentials Sections

The former Webmaster Guidelines have now been split into three main categories:

- Technical Requirements

- Key Best Practices

- Spam Policies

I’ll explore them in greater detail below.

The takeaway from this article is that not much has changed.

If you’re already familiar with the former Google Webmaster Guidelines, there’s no new material to learn.

But, if you want to refresh your knowledge or learn something new, keep reading!

Technical Requirements

According to Google, you don’t need to do much to a web page to get it into Google Search.

In other words, most websites meet these requirements without any special effort.

Now, you might be wondering, what are these requirements?

These are the basic things:

- Googlebot isn’t blocked

- The page works (meaning that Google receives an HTTP 200 success status code)

- The page has indexable content

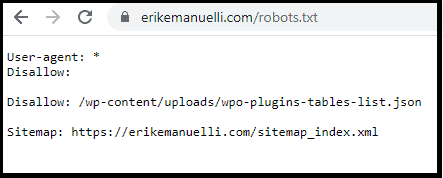

Googlebot Isn’t Blocked

If Googlebot can’t access your site, Google won’t be able to crawl it, and then it won’t show up in the SERP.

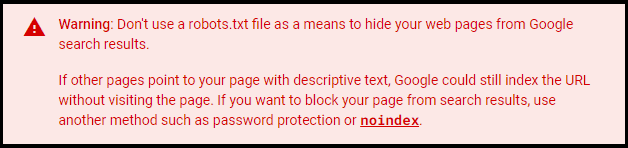

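To check whether it’s blocked, open your website’s robots.txt file (at the root of your domain, for example yourdomain.com/robots.txt) and look for a group that starts with “User-agent: Googlebot” or “User-agent: *”. Then check whether the Disallow rules in that group cover the pages you want to appear in search results.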

Here is an example:
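The robots.txt below is a hypothetical example: it blocks Googlebot from /private/ and blocks all other crawlers from /admin/. To test rules like these before publishing them, Python’s standard urllib.robotparser module can tell you whether a given user agent may fetch a URL. Treat it as a quick sanity check, not Google’s verdict: robotparser applies the first matching rule rather than the most specific one, and it doesn’t understand the * and $ path wildcards that Google supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /admin/
"""

def googlebot_can_fetch(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if these robots.txt rules let `agent` crawl `url`."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so nothing is downloaded.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

for url in ("https://example.com/blog/post",
            "https://example.com/private/report",
            "https://example.com/admin/login"):
    print(url, googlebot_can_fetch(ROBOTS_TXT, url))
```

Note the result for /admin/login: because the file has a group just for Googlebot, Googlebot follows only that group and ignores the “User-agent: *” rules, so /admin/ stays crawlable for it. If you want Googlebot to skip /admin/ as well, repeat the rule in its own group. For Google’s own reading of your live file, use the robots.txt report in Search Console.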

The Page Works

Google will only list pages that return the HTTP 200 (success) status code.

If it receives an error status code, such as 404 (not found) or 500 (internal server error), it treats the page as unavailable and won’t index it. Redirects (such as 301) are different: Google follows them to the target page.
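To see which status code a page returns, you can request it yourself. Here is a minimal sketch using Python’s standard urllib; the user-agent string is a made-up placeholder, and describe_status only summarizes the rule above rather than reproducing Google’s full handling of every status code.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url.

    urlopen follows redirects (301, 302, ...) automatically, so a redirect
    to a working page reports the target's status, usually 200.
    """
    # Placeholder user-agent string; any descriptive value works.
    request = Request(url, headers={"User-Agent": "status-check-sketch/0.1"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        # 4xx and 5xx responses are raised as HTTPError; the code is on the exception.
        return error.code

def describe_status(code: int) -> str:
    """Map a status code to what it roughly means for indexing."""
    if code == 200:
        return "200 OK: eligible for indexing"
    if 400 <= code < 500:
        return f"{code} client error: won't be indexed"
    if 500 <= code < 600:
        return f"{code} server error: won't be indexed"
    return f"{code}: check this response manually"
```

For example, describe_status(fetch_status("https://example.com/")) gives a one-line verdict for a homepage. Network failures such as DNS errors or timeouts raise URLError instead of returning a code, so wrap the call if you check many URLs at once.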

The Page Has Indexable Content

For Google to understand your page, you need to include content that can be indexed.

Google indexes text content in file types it supports, such as HTML and XML.

So, make sure the main content of your pages is available as text in a supported file type (and doesn’t violate Google’s spam policies); otherwise Google won’t be able to index it.
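A rough way to check for indexable text is to count the words a page would display. This sketch uses only Python’s html.parser and assumes you already have the page’s HTML. Unlike Google, it doesn’t run JavaScript, so a page that builds its content in the browser will look empty here even if Google can render it; in that case, the URL Inspection tool in Search Console shows the rendered HTML Google sees.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collects text outside <script>, <style>, and <noscript> elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())

def visible_word_count(html: str) -> int:
    """Count whitespace-separated words in the page's visible text."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return sum(len(chunk.split()) for chunk in parser.chunks)
```

A page that only ships an empty container plus a script, such as a typical single-page-app shell, scores 0 here, which is a hint to check how Google renders it.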

Key Best Practices

There are a few key best practices to keep in mind when building content for your website, which will increase your chances of being found in search results.

Google has a few core best practices:

- Create helpful, people-first content

- Use keywords that people are likely to search for and place them in the most important areas of your page (including titles, headings, and alt text)

- Ensure your site links are crawlable

- Spread the word about your site

- Make sure Google can understand the images, videos, structured data, and JavaScript on your pages

- Improve how your site appears in search results with rich snippets

- Block content you don’t want to appear in search results

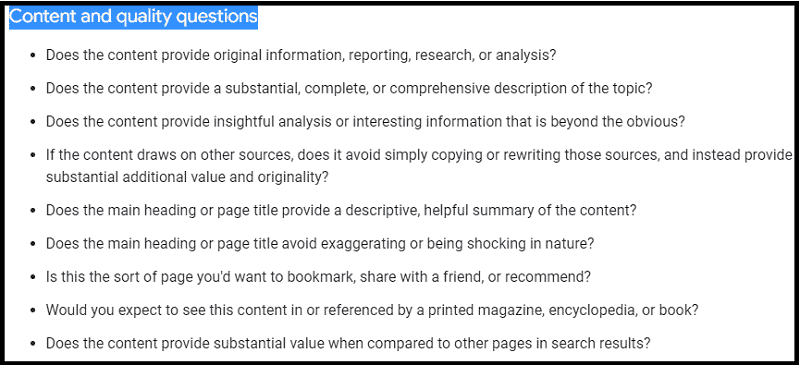

Create People-First Content

Google is all about ensuring the content that people see in search results is relevant.

So, it’s essential to create something valuable, with the user in mind.

Google recommends focusing on answering questions, providing accurate information, and writing content in a way that is easy to read and scan.

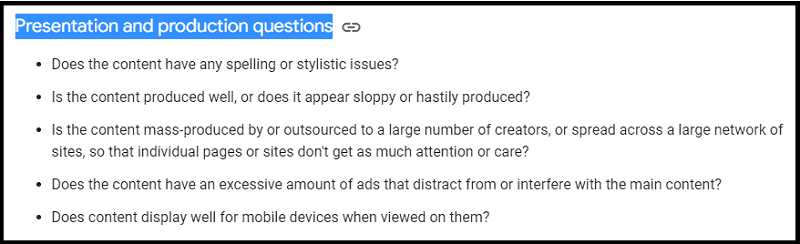

It also suggests self-assessing your content with questions like these:

- Does my content provide value to the reader?

- Does it cover the topic in depth?

- Does the title reflect the content?

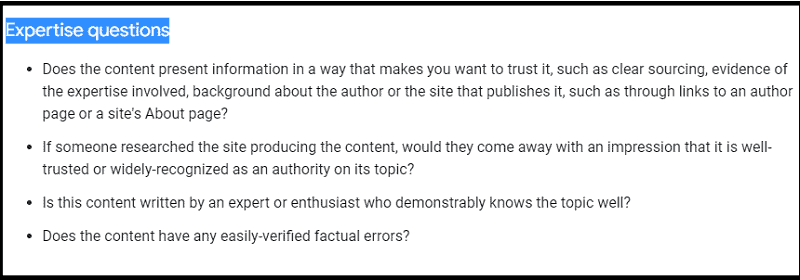

Also, you should prove that, as the author, you are an expert in your field.

Your expertise should be explained in detail on your About page.

Include references to reliable sources and be sure to quote them correctly.

Basically, you want to demonstrate (and, over time, improve) your E-E-A-T.

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness. It isn’t a single ranking factor, but Google’s search quality raters use it to evaluate content quality, and Google’s systems look for a mix of signals that point to it.

Working on it helps improve your visibility on search engines and other services, such as Google News or Google Discover.

Now, let’s dig a little deeper.

- Is my content free of spelling and grammar mistakes?

- Do my pages contain a lot of intrusive ads?

- Can the content be read and presented well on mobile?

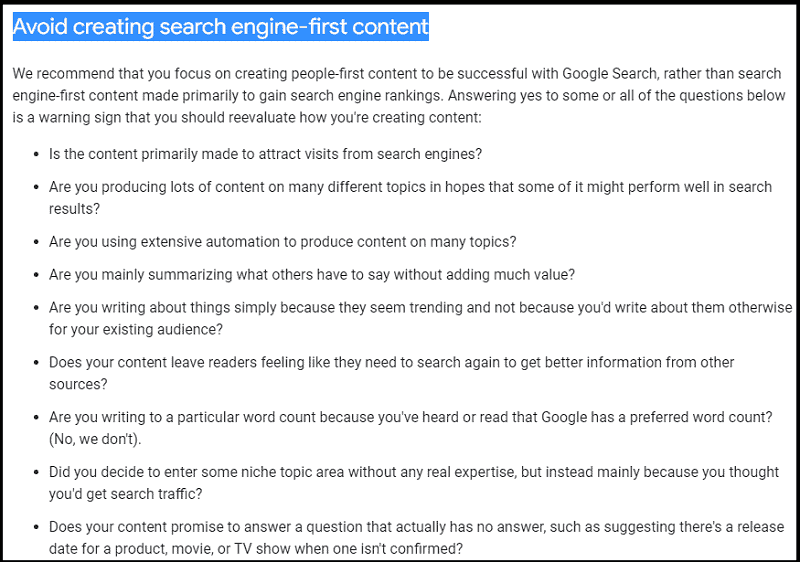

In short, you should focus on building people-first content and avoid writing just for search engines.

Use Keywords In Prominent Areas on Your Pages

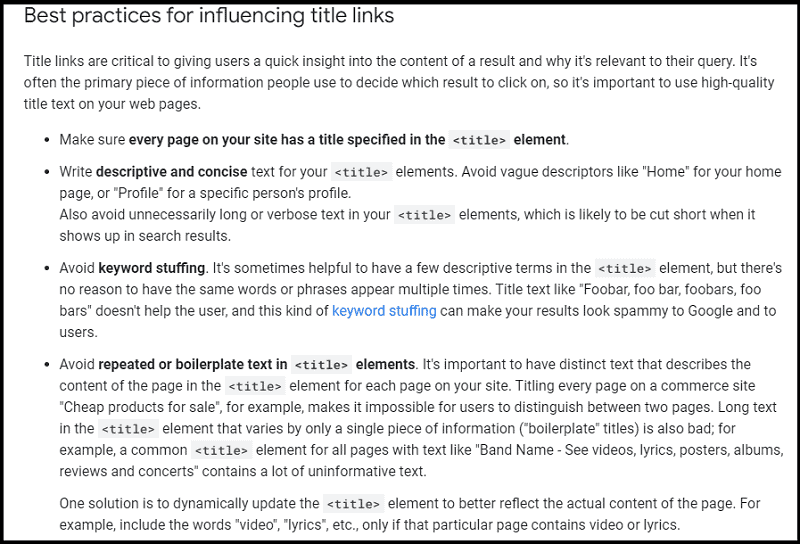

As Google looks for relevant keywords to match your content with the search queries, it’s important to use them in prominent areas such as titles, headings, and alt text.

Make sure to include the words that people are likely to search for.

You can use your favorite tool (like Google’s Keyword Planner) to find relevant terms. For more on keyword research, see these guides:

- LSI Keywords: How to Find and Use Them for Better SEO [Guide]

- Keyword Research: The Practical Guide You Need

- Long-Tail Keywords: How to Find Easy-to-Rank Terms

- Keyword Golden Ratio: How to Rank Fast on Google with KGR
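To audit whether a target keyword appears in the prominent places mentioned above (title, headings, and alt text), you can parse the page’s HTML. This is a minimal sketch with Python’s html.parser; the sample page and keyword are hypothetical, and it uses plain case-insensitive substring matching, which is much cruder than how Google matches queries to content (no stemming or synonyms).

```python
from html.parser import HTMLParser

class ProminentTextParser(HTMLParser):
    """Collects text from <title>, <h1>-<h6>, and <img alt="..."> attributes."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.current = None  # "title" or "headings" while inside such an element
        self.found = {"title": [], "headings": [], "alt": []}

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.current = "title"
            self.found["title"].append("")
        elif tag in self.HEADINGS:
            self.current = "headings"
            self.found["headings"].append("")
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.found["alt"].append(alt)

    def handle_endtag(self, tag):
        if tag == "title" or tag in self.HEADINGS:
            self.current = None

    def handle_data(self, data):
        if self.current:
            # Append to the open element, so inline tags such as <em>
            # inside a heading don't split its text.
            self.found[self.current][-1] += data

def keyword_placement(html: str, keyword: str) -> dict[str, bool]:
    """Report whether keyword appears in the title, any heading, and any alt text."""
    parser = ProminentTextParser()
    parser.feed(html)
    parser.close()
    needle = " ".join(keyword.lower().split())
    return {
        area: any(needle in " ".join(text.lower().split()) for text in texts)
        for area, texts in parser.found.items()
    }

page = ('<html><head><title>Trail Running Shoes Guide</title></head><body>'
        '<h1>Best <em>trail running</em> shoes</h1>'
        '<img src="shoe.jpg" alt="Runner on a mountain trail"></body></html>')
print(keyword_placement(page, "trail running shoes"))
```

In this sample, the keyword appears in the title and the main heading but not in the image’s alt text, which is where you’d look first if the image is relevant to the query.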

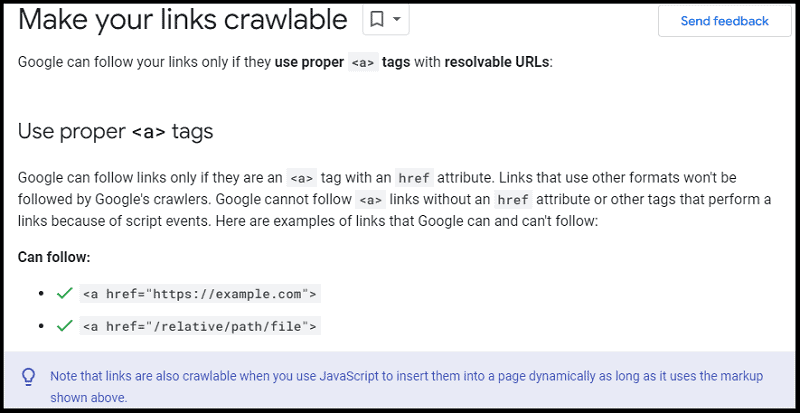

Ensure Your Site Links Are Crawlable

Google won’t be able to access your pages if the links on them are not crawlable.

Every page should have a link pointing to it from another page (to avoid orphan pages), so Google can discover and crawl it.

Also, use the URL Inspection tool in Google Search Console to check that Googlebot can access and render your pages, and review your robots.txt rules in Search Console’s robots.txt report.
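Google can generally follow only links written as <a> elements with an href attribute that resolves to a URL; links created purely with JavaScript event handlers (for example, a <span> with an onclick handler) may never be discovered. The sketch below, using Python’s html.parser, collects crawlable links from pages you’ve already downloaded and lists orphan pages, meaning pages no other page links to. The URLs and page contents are hypothetical, and the homepage is exempted because visitors and crawlers usually reach it directly.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

class LinkExtractor(HTMLParser):
    """Collects href values from <a> elements, the link form Google can follow."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return  # e.g. <span onclick="..."> is not a crawlable link
        href = dict(attrs).get("href")
        # Skip <a> without href, javascript: pseudo-links, and same-page #fragments.
        if href and not href.startswith(("javascript:", "#")):
            self.hrefs.append(href)

def find_orphans(pages: dict[str, str], home: str) -> set[str]:
    """Return URLs in pages that no other page links to (the homepage is exempt)."""
    linked: set[str] = set()
    for url, html in pages.items():
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments before comparing URLs.
            target, _fragment = urldefrag(urljoin(url, href))
            if target != url:
                linked.add(target)
    return {url for url in pages if url not in linked and url != home}

site = {
    "https://example.com/": '<a href="/blog/">Blog</a> <a href="/about">About</a>',
    "https://example.com/about": '<a href="https://example.com/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post 1</a> <span onclick="go(\'post-2\')">Post 2</span>',
    "https://example.com/blog/post-1": '<a href="/blog/">Back to blog</a>',
    "https://example.com/blog/post-2": '<a href="/blog/">Back to blog</a>',
}
print(find_orphans(site, home="https://example.com/"))
```

Here post-2 is reachable only through a span with an onclick handler, so it’s reported as an orphan; turning that span into a real <a href> link fixes it.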

Spread The Word About Your Site

To spread the word about your website, you can optimize your content for social sharing and use your Google Business Profile (formerly Google My Business) to help users better understand what you do.

You can also reach out to influencers and other related sites to create partnerships.

Follow Image SEO Best Practices

Google publishes specific best practices for images, such as using descriptive file names and alt text.

Make sure you follow them when optimizing your images for SEO.

If you have supplementary content like videos, structured data, and JavaScript on your page, follow specific best practices for those materials so Google can understand them as well.

Leverage Rich Snippets

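Rich snippets (also called rich results) are search results that show extra information, such as star ratings, prices, or event dates. They’re an important part of SEO: they give users more information about your page before they click, and the structured data behind them helps search engines understand what your content is about.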

Google supports several types of structured data (like articles, products, recipes, local businesses, or events).

Adding this markup helps Google understand your page and makes it eligible, though not guaranteed, to appear in search results with a rich snippet.
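Google recommends JSON-LD for structured data where possible. As a minimal sketch, the Python function below builds a JSON-LD block for a schema.org Article. The property set is a small illustrative subset, and the headline, author, date, and image values are placeholders. Check the result with Google’s Rich Results Test before deploying it.

```python
import json

def article_jsonld(headline: str, author: str, date_published: str, image_url: str) -> str:
    """Build a <script type="application/ld+json"> block for a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-03-05"
        "image": [image_url],
    }
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so a value such as "</script>" can't end the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

print(article_jsonld(
    headline="Google Search Essentials: What Site Owners Need to Know",
    author="Jane Doe",  # placeholder author
    date_published="2024-03-05",
    image_url="https://example.com/images/cover.jpg",
))
```

Paste the printed element into the page’s HTML. Generating it with json.dumps rather than by hand avoids quoting mistakes that would make the JSON invalid.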

Block Only Specific Content

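If you want to keep specific pages or files out of Google’s index, use the robots meta tag or the X-Robots-Tag HTTP header with a noindex rule. Google has to crawl a page to see that rule, so don’t also block the page in robots.txt.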
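To confirm that a page carries a noindex rule, check two places: robots meta tags in the HTML and the X-Robots-Tag response header. The minimal Python sketch below checks both for a page you’ve already fetched. The sample HTML and headers are made up, and as a simplification it only reads meta tags named robots or googlebot and ignores header values that carry a user-agent prefix.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in {"robots", "googlebot"}:
            for token in (a.get("content") or "").split(","):
                self.directives.add(token.strip().lower())

def is_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if meta tags or the X-Robots-Tag header ask search engines not to index.

    Simplification: header values with a user-agent prefix
    (e.g. "bingbot: noindex") are not handled.
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    directives = parser.directives | {t.strip().lower() for t in header.split(",")}
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})

print(is_noindex('<meta name="robots" content="noindex, follow">', {}))
```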

Spam Policies

The spam policy section is one of the most important things for site owners.

Here, you’ll find the behaviors and tactics that can cause Google Search to rank your pages lower or remove them from search results entirely.

The following are the topics covered (including three new ones added with the March 2024 core update):

- Cloaking

- Doorways

- Hacked Content

- Hidden Text and Links

- Keyword Stuffing

- Link Spam

- Machine-Generated Traffic

- Malware and Malicious Behaviors

- Misleading Functionality

- Scraped Content

- Sneaky Redirects

- Spammy Automatically-Generated Content

- Thin Affiliate Pages

- User-Generated Spam

- Copyright-Removal Requests

- Online Harassment Removals

- Scam and Fraud

- Expired Domain Abuse

- Scaled Content Abuse

- Site Reputation Abuse

Let’s see them in detail.

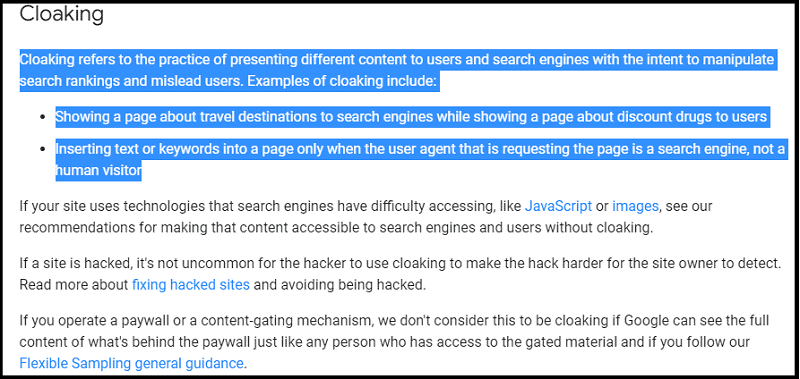

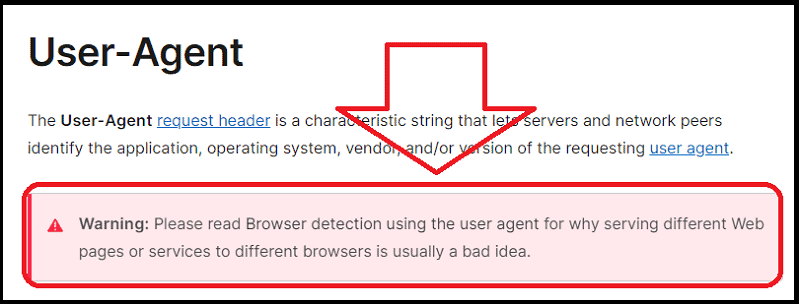

Cloaking

Cloaking happens when Googlebot sees different content than what a user sees, and Google considers it to be deceptive.

This can be done in a number of ways, but the most common method is to use IP addresses or the User-Agent HTTP header to determine which version of the page to serve.

For example, if a request comes from an IP address associated with a known search engine crawler, the server may return a different version of the page than the one regular users see.

Not every User-Agent-based difference is cloaking, though. Serving a mobile-optimized version of a page to mobile devices (known as dynamic serving) is fine, as long as Googlebot’s smartphone crawler gets the same version that mobile users do.

The main reason why people use cloaking is to try and fool search engines into thinking that their page is more relevant for a certain keyword than it actually is. This is done by serving a different version of the page to the search engine crawler than what is shown to regular users.

The goal is for the search engine to index the cloaked version and rank it higher than the page deserves. However, this technique rarely works for long, and if Google detects it, your site can be demoted or removed from search results.

Sites also serve different versions of a page based on the user’s country or language. For example, if you have a website that sells shoes, you may want to serve a different version of the site to users in France than you do to users in Canada. This can be done using either IP address geolocation or the Accept-Language HTTP header. On its own, this isn’t cloaking, as long as Googlebot gets the same version that a user from the same location would see.

Geolocation using IP addresses is not always accurate, but it can be good enough for determining which country a user is in. The Accept-Language header is generally more accurate but requires that the user’s browser is configured properly.

So it all adds up to this: cloaking is a black-hat technique and it’s against Google’s Search Essentials Policies.
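If you want to make sure your own site isn’t serving Googlebot something different by accident (for example, through a plugin or a CDN rule), one quick self-check is to fetch a page with a browser user agent and a Googlebot user agent and compare the responses. This Python sketch uses placeholder user-agent strings that are assumptions, not Google’s current list. It only catches user-agent-based differences, not IP-based ones, and dynamic content such as timestamps, tokens, or ads will also trigger a mismatch, so a difference means “compare the two versions by hand”, not “this is cloaking”.

```python
import hashlib
from urllib.request import Request, urlopen

# Placeholder user-agent strings (assumptions); check Google's crawler
# documentation for the strings its crawlers currently send.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

def body_fingerprint(body: bytes) -> str:
    """Hash the response body after collapsing runs of whitespace."""
    return hashlib.sha256(b" ".join(body.split())).hexdigest()

def fetch_body(url: str, user_agent: str, timeout: float = 10.0) -> bytes:
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        return response.read()

def differs_by_user_agent(url: str) -> bool:
    """True if the body served to the Googlebot user agent differs from the browser one."""
    as_browser = body_fingerprint(fetch_body(url, BROWSER_UA))
    as_googlebot = body_fingerprint(fetch_body(url, GOOGLEBOT_UA))
    return as_browser != as_googlebot
```

For the authoritative view of what Google actually fetched, the URL Inspection tool in Search Console remains the better source.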

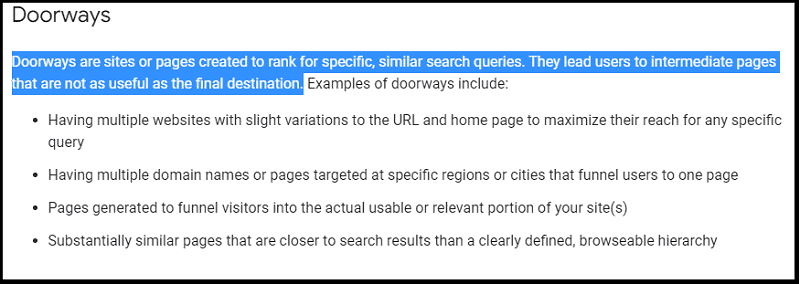

Doorways

Doorways are websites or pages created for search engines rather than users.

They’re often used to funnel search traffic to a single destination, such as a company’s main website.

They can be created by automatically generating multiple landing pages that target specific keywords, or by duplicating existing content from the main website and modifying it slightly to target different keywords.

In either case, doorways are designed to rank better in search results for specific terms, usually at the expense of providing a poor user experience.

Hacked Content

Hacked content is any content on your website that has been placed or modified in a malicious way by an attacker.

This can include injecting malicious code into pages, adding hidden text and links, or redirecting users to other websites.

Google Search Essentials includes specific guidelines for handling hacked content, such as notifying Google when you discover a hack, taking steps to prevent further hacks, and restoring any content that was modified or removed.

Hidden Text and Links

Hidden text and links are techniques used to try and manipulate search rankings by providing Googlebot with content that regular users cannot see.

This can be done by using CSS or JavaScript to hide text or links or placing them off-screen so they are not visible on the page.

Other examples include hiding text behind an image and setting the font size or opacity to zero.

Google doesn’t allow this kind of manipulation and advises site owners to remove any hidden text or links they discover on their websites.
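To look for hidden text on your own pages (for example, text injected by a hacked plugin), you can scan inline styles for the hiding techniques described above. This Python sketch only inspects inline style attributes, not stylesheets, CSS classes, or JavaScript, and its pattern list is an assumption rather than Google’s detection logic. Tabs, accordions, and menus also hide content legitimately, so treat each hit as something to review, not as a violation.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns often used to hide text (heuristic and incomplete).
HIDING_PATTERNS = [
    re.compile(r"display\s*:\s*none"),
    re.compile(r"visibility\s*:\s*hidden"),
    re.compile(r"font-size\s*:\s*0(?:px|em|rem|%)?\s*(?:;|!|$)"),
    re.compile(r"opacity\s*:\s*0(?:\.0+)?\s*(?:;|!|$)"),
    re.compile(r"text-indent\s*:\s*-\d{3,}"),  # e.g. text-indent: -9999px
]

class HiddenStyleFinder(HTMLParser):
    """Records (tag, style) for elements whose inline style matches a hiding pattern."""

    def __init__(self) -> None:
        super().__init__()
        self.flagged: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        style = (dict(attrs).get("style") or "").lower()
        if any(pattern.search(style) for pattern in HIDING_PATTERNS):
            self.flagged.append((tag, style))

def find_hidden_elements(html: str) -> list[tuple[str, str]]:
    finder = HiddenStyleFinder()
    finder.feed(html)
    finder.close()
    return finder.flagged

sample = ('<p style="font-size:0; opacity: 0">cheap shoes cheap shoes</p>'
          '<div style="display: none">Menu</div>'
          '<p style="font-size: 16px; opacity: 0.5">Visible text</p>')
for tag, style in find_hidden_elements(sample):
    print(tag, "->", style)
```

In the sample, the zero-size paragraph is the suspicious one, while the hidden div could just as well be a collapsed navigation menu.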

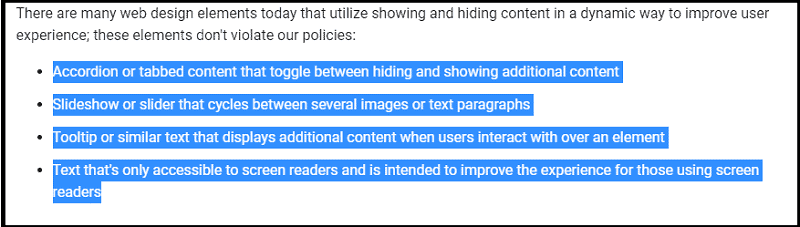

However, many dynamic web design elements show and hide content to improve user experience, and they don’t violate Google’s policy:

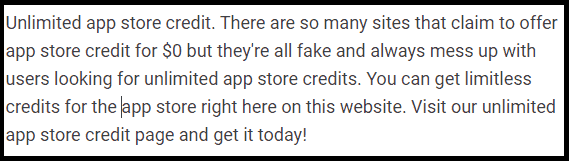

Keyword Stuffing

Keyword stuffing is the practice of cramming a page with as many keywords as possible, in an effort to increase its search rankings.

This often involves repeating the same term or phrase over and over again or using irrelevant words just to manipulate rankings.

This is a no-no.

In fact, Google’s Search Essentials includes a funny example of keyword stuffing:

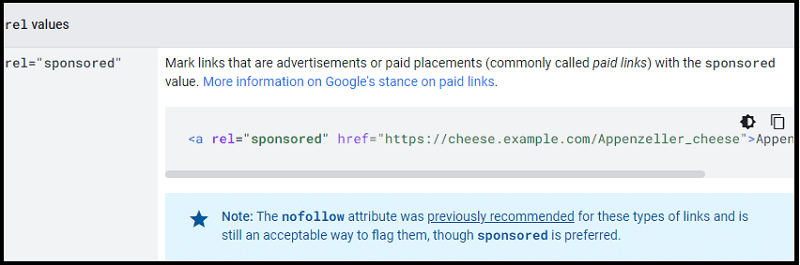
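Google doesn’t publish a keyword-density limit, and density isn’t how its spam policy defines keyword stuffing. Still, measuring how much of a page a single phrase takes up is a quick way to spot copy that reads like the example above. A minimal Python sketch, with hypothetical sample text:

```python
import re

WORD_RE = re.compile(r"[a-z0-9']+")

def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in text that belong to occurrences of keyword."""
    words = WORD_RE.findall(text.lower())
    phrase = WORD_RE.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the full phrase starts.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words)

sample = ("Cheap shoes. Buy cheap shoes today. Our cheap shoes are "
          "the best cheap shoes.")
print(f"{keyword_density(sample, 'cheap shoes'):.0%}")  # prints 57%
```

More than half of the sample’s words belong to “cheap shoes”, which no natural sentence needs. Any cutoff you pick for flagging pages is your own judgment call.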

Link Spam

Link spam typically consists of low-quality links placed in content in an attempt to game the system and improve search engine rankings.

In other words, this is a form of black hat SEO. And it’s something that you should not be involved in.

Some examples of link spam include:

- Buying or selling links to manipulate rankings (such as exchanging links for money, products, or services)

- Excessive link exchange

- Automated programs or services that build links to your website

- Low-quality directories

However, Google acknowledges that buying and selling links is a normal part of the web economy for advertising and sponsorship purposes.

As long as these links are marked with the rel="nofollow" or rel="sponsored" attribute, there is no policy violation.
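If you sell or exchange links, you can audit your pages to confirm that every paid link carries rel="sponsored" or rel="nofollow". This is a minimal Python sketch; paid_domains is a hypothetical list you’d maintain yourself (for example, from your sponsorship agreements), and shoe-brand.example is a placeholder domain.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

QUALIFYING_RELS = {"sponsored", "nofollow"}

class AnchorCollector(HTMLParser):
    """Collects (href, rel tokens) for every <a href> on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, set[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        a = dict(attrs)
        if a.get("href"):
            rel = set((a.get("rel") or "").lower().split())
            self.links.append((a["href"], rel))

def unqualified_paid_links(html: str, paid_domains: set[str]) -> list[str]:
    """Return links to paid_domains (or their subdomains) lacking sponsored/nofollow."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    flagged = []
    for href, rel in collector.links:
        host = (urlparse(href).hostname or "").lower()
        is_paid = any(host == d or host.endswith("." + d) for d in paid_domains)
        if is_paid and not rel & QUALIFYING_RELS:
            flagged.append(href)
    return flagged

page = ('<a href="https://shoe-brand.example/deal" rel="sponsored">Deal</a>'
        '<a href="https://www.shoe-brand.example/sale">Sale</a>'
        '<a href="https://news.example/story">Story</a>')
print(unqualified_paid_links(page, {"shoe-brand.example"}))
```

Only the second link is flagged: it points to the sponsor’s subdomain without a qualifying rel value, while the ordinary editorial link to news.example needs no attribute.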

Machine-Generated Traffic

In Google’s spam policies, machine-generated traffic means automated traffic sent to Google itself, such as automated search queries or scraping Google’s results for rank-checking purposes without permission.

Bots hitting your own website are a related problem. This type of traffic can be difficult to detect and block, as it often mimics legitimate human activity.

In some cases, bots are used to scrape content from a website or to launch cyberattacks such as Distributed Denial of Service (DDoS) attacks.

So, it is important to monitor your website for suspicious activity that could indicate this kind of issue.

To spot it, you can use tools such as Google Analytics, firewall logs, and network monitoring software to detect and block malicious bots.

Google’s Search Essentials prohibits sending machine-generated traffic to Google, because it consumes resources and interferes with Google’s ability to serve users.

It also violates the Google Terms of Service.
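One common kind of bot on your own site is a scraper that pretends to be Googlebot by copying its user-agent string. Google describes a DNS-based way to verify its crawlers: run a reverse DNS lookup on the visiting IP address, check that the hostname belongs to a Google domain, then run a forward DNS lookup on that hostname and confirm it resolves back to the same IP. Below is a minimal Python sketch of that check using the standard socket module. The accepted domain suffixes (googlebot.com and google.com) are an assumption to double-check against Google’s current crawler documentation, and gethostbyname_ex only returns IPv4 addresses.

```python
import socket

# Accepted reverse-DNS domains (assumption: re-check Google's current docs).
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")

def hostname_is_google(hostname: str) -> bool:
    """True if hostname ends in one of the accepted Google domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_CRAWLER_SUFFIXES)

def is_verified_googlebot(ip: str) -> bool:
    """Reverse DNS, domain check, then forward DNS back to the same IP."""
    try:
        hostname, _aliases, _addresses = socket.gethostbyaddr(ip)
    except OSError:  # socket.herror / socket.gaierror: no PTR record or lookup failed
        return False
    if not hostname_is_google(hostname):
        return False
    try:
        _name, _aliases, forward_ips = socket.gethostbyname_ex(hostname)  # IPv4 only
    except OSError:
        return False
    return ip in forward_ips
```

Run it on IPs from your server logs whose user agent claims to be Googlebot; a False result means the visitor is very likely an impostor you can rate-limit or block. DNS lookups are slow, so cache the results per IP.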

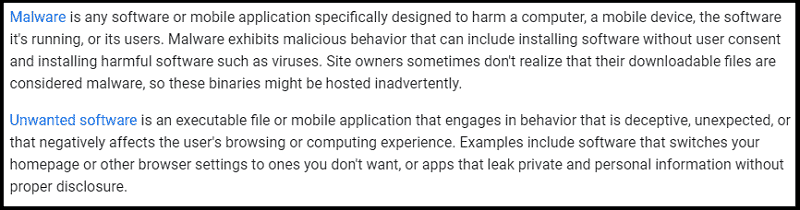

Malware and Malicious Behaviors

Malware is any software that is created with the intent of harming a system or stealing sensitive data.

Google advises website owners to be vigilant in detecting malware, as it can lead to website penalization.

Google also warns against other bad behaviors such as phishing and click fraud, both of which are illegal activities aimed at deceiving users and luring them into providing personal information or clicking on links that may contain malicious code. Google’s policy prohibits any behavior that attempts to deceive its users.

Additionally, Google advises site owners to regularly audit their websites for any suspicious content and links that could be used to spread malware or other malicious activities.

To avoid potential penalties, site owners should also ensure that all third-party software installed on the website is kept up-to-date with the latest security patches.

Finally, it is recommended to review the Unwanted Software Policy.

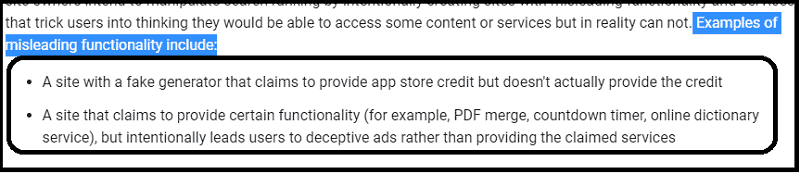

Misleading Functionality

Misleading functionality describes any feature that misleads users. This includes features such as pop-ups or redirects that take them to a page they did not intend to visit.

Examples of misleading functionality include:

- Pop-ups or redirects that take users away from the original page they intended to visit

- Links disguised as buttons or images that do not lead to the expected content

- Use of dark patterns to trick users into taking an action they didn’t intend

- Automatically opening new tabs or windows

Google’s Search Essentials also prohibits deceptive design elements that simulate the look and feel of native application installations.

This includes links that prompt users with a request to download software in order to progress further on the page.

Such requests should always include an appropriate warning about what is being installed and provide clear instructions on how to cancel the download if desired.

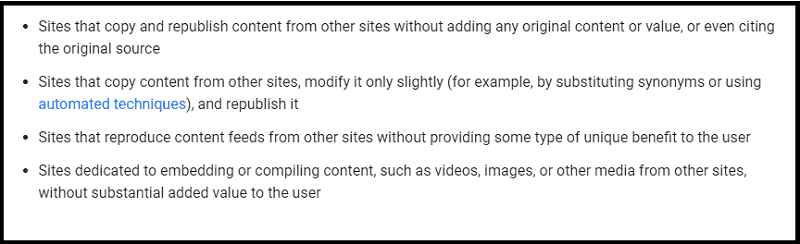

Scraped Content

Some webmasters base their entire sites upon content plagiarized from other, more credible sources.

Google prohibits this practice and recommends creating original content.

They also advise against copying text, images, or video from other websites without proper authorization.

A website may be demoted if it has received a significant number of valid legal removal requests.

Some examples of scraped content are:

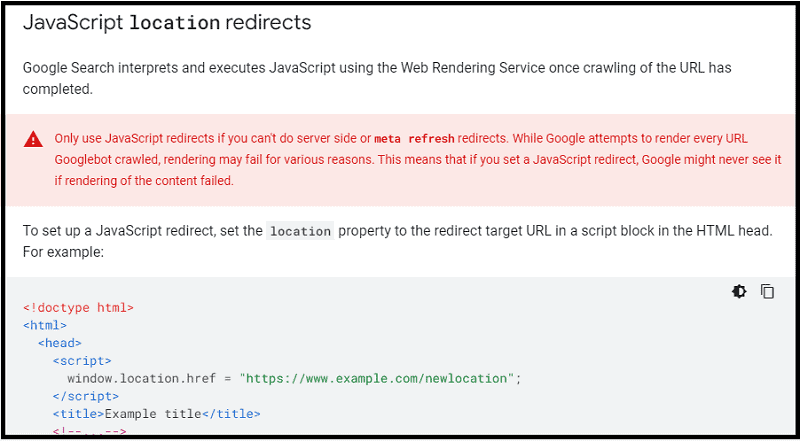
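Google doesn’t document how it detects scraped content, but if you suspect another site has copied yours, a common textbook way to measure text overlap is w-shingling with Jaccard similarity: split each text into overlapping runs of k words and compare the two sets. A minimal Python sketch; k = 3 is an arbitrary choice, and in practice you’d compare the main article text rather than whole pages.

```python
import re

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Size of the shingle intersection divided by the size of the union (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

print(jaccard_similarity("the quick brown fox jumps", "the quick brown fox sleeps"))
```

A score near 1.0 means the texts are nearly identical. The two sample sentences share two of their four distinct three-word shingles, so they score 0.5.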

Sneaky Redirects

Sneaky redirects maliciously send users to a different URL than the one they requested, either to show users and search engines different content or to show users something they didn’t expect.

Google’s Search Essentials prohibits any attempts to deceive its users and advises website owners to avoid using such tactics, as they can lead to penalties.

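To stay on the right side of this policy, use standard server-side redirects, such as 301 (permanent) or 302 (temporary), only for legitimate purposes, and never send users somewhere different from what search engines see (for example, redirecting only mobile visitors to another domain).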

Google also recommends regularly auditing your website for any hidden malicious code or links that could be used to spread malware or redirect users without their knowledge.

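Not every redirect is sneaky. There are legitimate reasons to redirect, such as moving your site to a new address or consolidating several pages into one. When creating a redirect, consider the user’s intention and make sure the new page gives them what they were looking for.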

To learn more about how to use redirections on your site you can visit Google Search Central.
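Sneaky redirects are often client-side: a meta refresh tag or a script that changes window.location, sometimes only for mobile visitors. When auditing your own pages for unexpected redirects, a rough first pass is to scan each page’s HTML for both. This Python sketch is a regex heuristic (an assumption, not Google’s method): it won’t see redirects in external script files, obfuscated code, or server-side 301/302 responses, and legitimate redirects will be flagged too.

```python
import re
from html.parser import HTMLParser

# Assignments to location / location.href, or calls to location.replace/assign.
JS_REDIRECT = re.compile(
    r"(?:window\.|document\.)?location(?:\.href)?\s*=(?!=)|location\.(?:replace|assign)\s*\("
)

class RedirectFinder(HTMLParser):
    """Flags <meta http-equiv="refresh"> tags and inline scripts that change location."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[str] = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("http-equiv") or "").lower() == "refresh":
            self.findings.append(f"meta refresh: {a.get('content')}")
        elif tag == "script":
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        if self._in_script and JS_REDIRECT.search(data):
            self.findings.append("script redirect: " + data.strip()[:60])

def find_redirects(html: str) -> list[str]:
    finder = RedirectFinder()
    finder.feed(html)
    finder.close()
    return finder.findings

page = ('<head><meta http-equiv="Refresh" content="0; url=https://spam.example/"></head>'
        '<body><script>if (/Mobi/.test(navigator.userAgent)) '
        '{ window.location.href = "https://spam.example/m"; }</script></body>')
for finding in find_redirects(page):
    print(finding)
```

The sample mimics the classic pattern of a page that looks normal on desktop but sends mobile visitors to another domain; both the meta refresh and the mobile-only script are reported.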

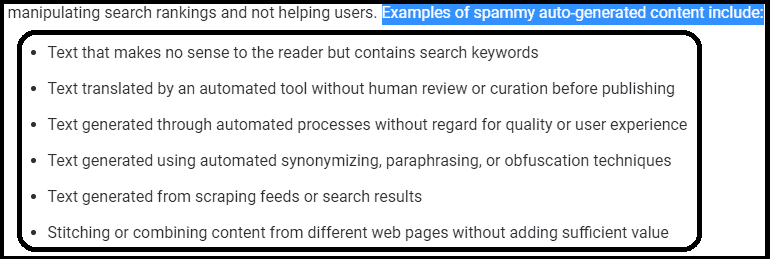

Spammy Automatically-Generated Content

Google warns against content generated programmatically to manipulate search rankings without adding original value, and sites that contain this kind of spammy, low-quality content may be penalized.

Examples of auto-generated content include:

- Content generated from templates

- Articles created through automated “spinning” programs

- Duplicate pages with altered keywords

Thin Affiliate Pages

Thin affiliate pages are pages with product affiliate links whose descriptions and reviews are copied directly from the original merchant, without any original content or added value.

Google considers this type of page to be deceptive and will probably penalize any sites that use them.

The Search Essentials documentation recommends that site owners focus on creating quality content instead, as these types of pages are rarely useful for people.

User-Generated Spam

User-generated spam is spammy content that users add to your website through features meant for user content, such as comments, forum posts, or user profiles.

Google recommends that you regularly monitor and delete any such content.

Examples of user-generated spam include:

- Low-quality links posted on blog comments or forums

- Comments unrelated to the topic at hand
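Google recommends marking links in user-generated content, such as comments and forum posts, with rel="ugc", and the value can be combined with nofollow. If your comment system stores plain text, one way to guarantee this is to build the HTML yourself. This minimal Python sketch escapes the comment (so users can’t inject their own HTML links) and turns bare URLs into links with rel="ugc nofollow". The URL regex is a simplification and will miss some valid URLs or include trailing punctuation.

```python
import html
import re

# Simplified URL pattern (an assumption): stops at whitespace, quotes, and angle brackets.
URL_RE = re.compile(r"""https?://[^\s<>"']+""")

def render_comment(text: str) -> str:
    """Escape a plain-text comment and link bare URLs with rel="ugc nofollow"."""
    parts = []
    last = 0
    for match in URL_RE.finditer(text):
        parts.append(html.escape(text[last:match.start()]))
        url = html.escape(match.group(0))
        parts.append(f'<a href="{url}" rel="ugc nofollow">{url}</a>')
        last = match.end()
    parts.append(html.escape(text[last:]))
    return "".join(parts)

print(render_comment("Great post! Cheap pills at https://spam.example/pills <b>now</b>"))
```

The raw <b> tag comes out as harmless escaped text, and the spammy URL is still clickable but carries no endorsement from your site.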

And in case you’re wondering, here’s how to optimize WordPress comments.

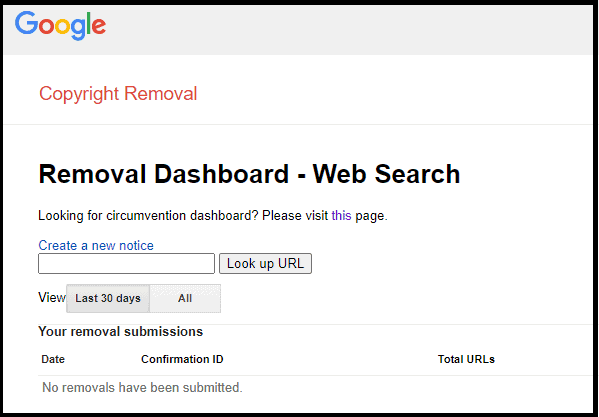

Copyright-Removal Requests

Google advises website owners to respect the copyrights of others and refrain from posting any content without permission.

Google will respond to copyright removal requests, which can result in a website being demoted if it has received a significant number of valid legal removal requests.

To avoid being penalized, make sure you aren’t infringing on anyone else’s intellectual property rights.

And here’s the Google Removal Dashboard, in case you need to report someone stealing your content.

Online Harassment Removals

Google advises website owners to take steps to ensure that their sites are not used for online harassment.

Google will respond to requests for the removal of content that incites violence, promotes hate speech, and/or harasses individuals or groups.

It is recommended that site owners regularly monitor their websites for any such content and remove it promptly to avoid being penalized.

Scam and Fraud

Google also advises taking steps to ensure that your site is not used for fraudulent purposes or scams.

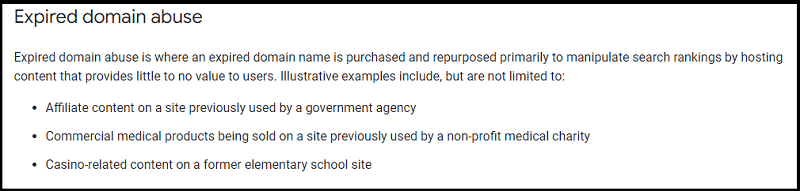

Expired Domain Abuse

Expired domain abuse is when someone buys an expired domain and repurposes it mainly to manipulate search rankings, for example by filling it with low-quality content or spam, or by redirecting it to another website to take advantage of the backlinks it earned in the past.

Google recommends ensuring that the content on your site is relevant and useful, as well as monitoring any links pointing to your site from expired domains to ensure they are not being used for malicious purposes.

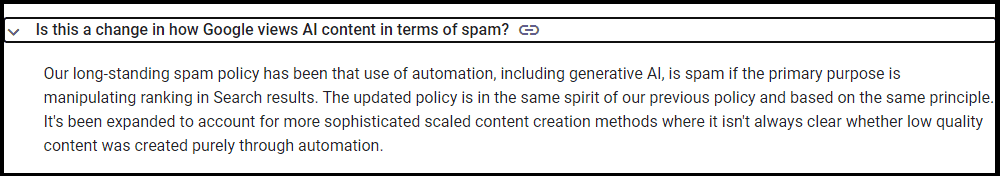

Scaled Content Abuse

Thanks to AI and machine learning, creating content at scale has become easier than ever. However, this can also lead to scaled content abuse where low-quality or irrelevant content is generated in large quantities.

Google recommends that site owners focus on creating helpful, relevant content instead of trying to cut corners by using automated tools to generate large amounts of content.

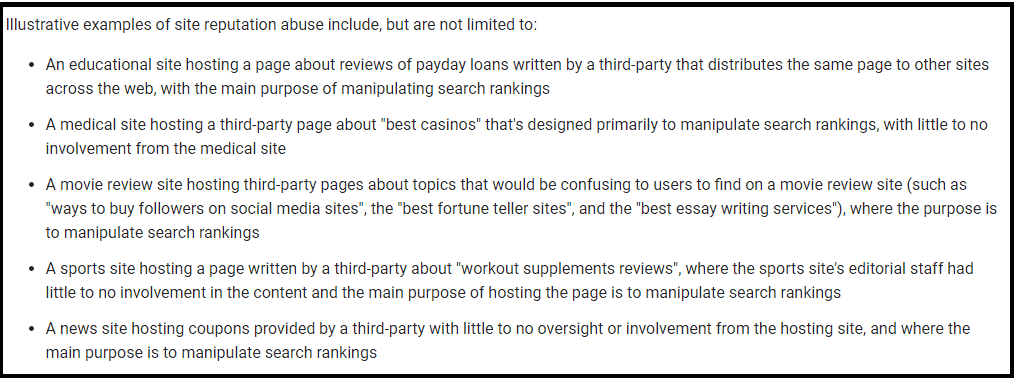

Site Reputation Abuse

Site reputation abuse is when third-party pages are published on a reputable site, with little or no oversight from the site owner, to manipulate search rankings by taking advantage of that site’s ranking signals.

Google advises against using any tactics that aim to manipulate a website’s reputation and encourages site owners to focus on creating quality content instead.

This is often called “parasite SEO” or “parasite hosting”, and it can result in penalties for both the third-party content and the site hosting it.

Before You Go

Google Search Essentials is just a part of the online marketing game.


And remember, in the end, it all comes down to this:

SEO and user experience go hand in hand if you want to create a successful website.

And you?

What do you think?

Please share your views in the comments below, thanks!
